Short bio & research interests

I am an associate professor at the Internet Technology and Data Science Lab (IDLab), Ghent University - imec, Belgium. Until a few years ago, I was mainly working on natural language processing (NLP), but I've always been more interested in the underlying machine learning methods and models than in the natural language applications themselves. As soon as I was appointed assistant professor in 2019, I initiated an AI research track that goes beyond NLP, with health as a key application area. I've been very fortunate to gather a group of highly talented PhD students working in that direction, and I'm grateful to the Research Foundation Flanders for funding many of them.

The research tracks we recently engaged in include non-conventional AI techniques (with a recent paper on Hopfield Networks and Deep Equilibrium Models, as well as a breakthrough in simulating deeper predictive coding networks than was possible before) and neuro-symbolic AI (with recent work on clinical reasoning as well as learning treatment policies on combined tabular and textual clinical data). In collaboration with Luca Ambrogioni from Radboud University, we've looked into dynamic guidance strategies for diffusion models (with a paper on negative dynamic guidance at ICLR 2025, and one on feedback guidance at NeurIPS 2025), and with Stijn Vansteelandt and the Syndara team at the UZ Ghent hospital, we've worked on the inferential utility of synthetic medical data (with papers at UAI and NeurIPS 2024).

We've spent considerable time and effort over the last year on exploring the state of the art and beyond in AI for drug design, especially from the perspective of powerful protein language models and diffusion models. We've set up several collaborations in this area, with imec and a number of Flemish biotech companies. Given the available budget and our ambitions in this area, I am focusing most of my time on this newest research track. The goal is for our recent work on diffusion models, energy-based models, and neuro-symbolic approaches to ultimately come together in the application area of protein design.

Over the last decade, I've been building up and co-leading the Text-to-Knowledge research cluster with prof. Chris Develder. We worked on natural language processing (NLP) in general, for applications in several domains (the media, health, economics and law). Our current area of focus in NLP is conversational AI, with a strong emphasis on the use and development of neural language models. Some examples of recent work include the state-of-the-art biomedical sentence encoder BioLORD 2023, the transtokenization method for translating monolingual language models, the work on Anchored Preference Optimization and Contrastive Revisions for the alignment of generative language models, the ideas on highly efficient unsupervised dialogue structure induction, and the work on extreme multi-label classification in the HR domain.

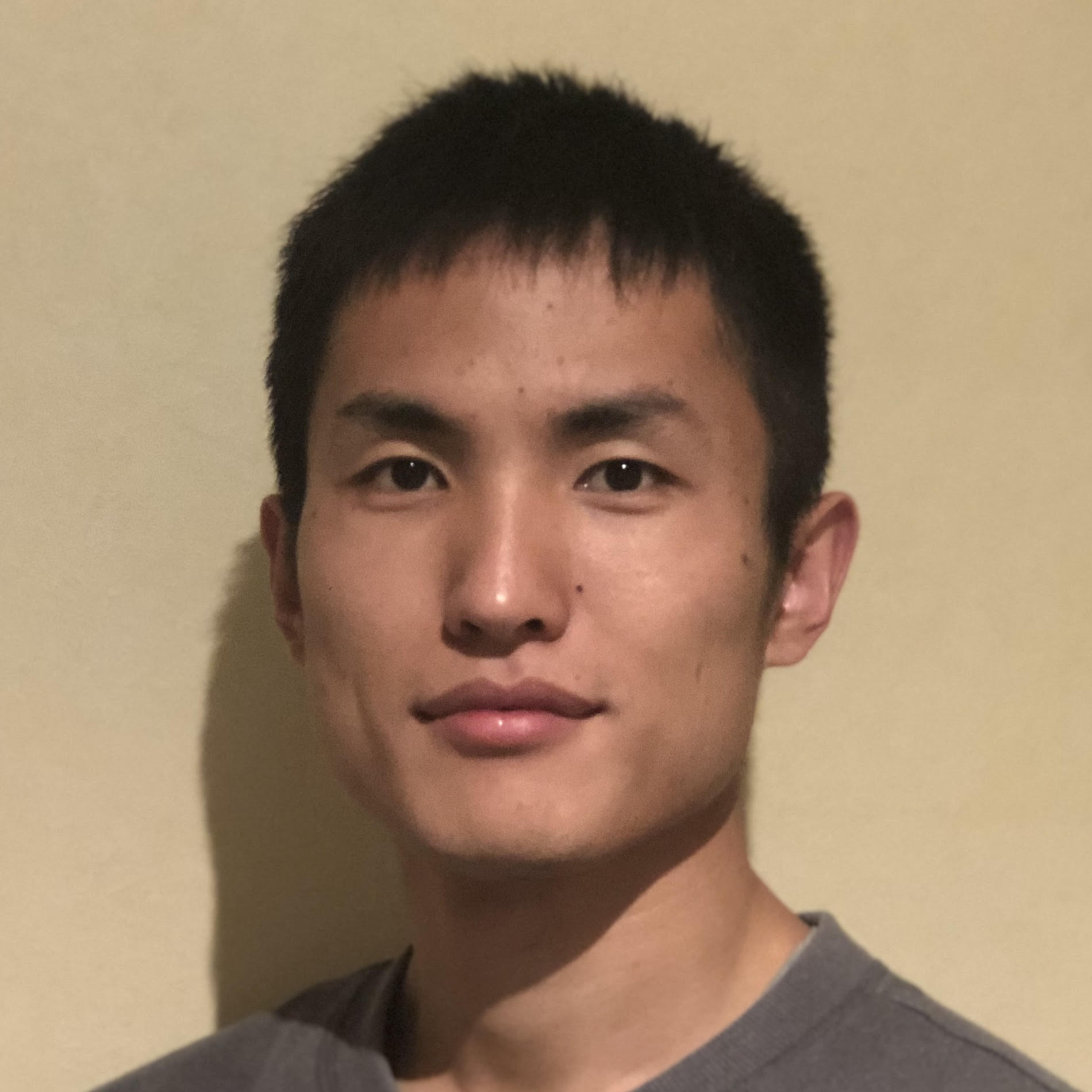

Here's where I come from. In 2005, I received my M.Sc. degree in electrical engineering at Ghent University, after finishing my final year and master's thesis at ETH Zurich, Switzerland. In 2009, funded by a grant from the Research Foundation - Flanders (FWO), I obtained my Ph.D. at the Ghent University Department of Information Technology, under the supervision of prof. Daniel De Zutter, in the area of computational electromagnetics. Shortly afterwards, I got involved in research on Information Retrieval, in collaboration with the Database Group at the University of Twente in the Netherlands. As a post-doc, I was heavily involved in getting project funding and managing projects, mainly in the media sector. Gradually, my interests moved to Natural Language Processing, and to machine learning and AI in general. It was my research stay in 2016 at University College London, in prof. Sebastian Riedel's Machine Reading lab, that made me decide to continue my career as an academic researcher. In October 2019, I was appointed assistant professor at IDLab, Ghent University, co-affiliated with imec. Although the job description didn't change a lot (apart from some additional teaching - which I love), it turns out that the freedom in research directions gives me lots of satisfaction. That, and the great students that I've been lucky enough to attract.